by James Wallace Harris, 2/9/26

“Enshittification” is the trendy catchword of the moment. Cory Doctorow coined this handy term and describes what it means in his latest book, Enshittification: Why Everything Suddenly Got Worse and What to Do About It. However, I don’t think you need to read the book to get the idea. At a minimum, just listen to the interview with Doctorow and Tim Wu below on the Ezra Klein show titled “We Didn’t Ask for This Internet”:

Tim Wu covers similar ground in his book The Age of Extraction.

For my purposes, I use both terms to point to a specific kind of corporate greed that’s making our lives miserable. We could use both terms in this sentence: The relentless extraction of wealth is leading to the enshittification of society.

Cory Doctorow uses the Internet to illustrate the process. Every program, app, or site begins life doing something wonderful for users. Often, their creators promise to always keep their users’ best interests at the core of their business model. But as time goes on and they need to keep making more money, they forget that promise. Eventually, they will do anything to get more users and more money.

Tim Wu models his term on the evils of private equity and similar practices. In the interview, Wu gives this evil example:

In America, hospitals preferentially hire nurses through apps. And they do so as contractors. Hiring contractors means that you can avoid the unionization of nurses. And when a nurse signs on to get a shift through one of these apps, the app is able to buy the nurse’s credit history.

The reason for that is that the U.S. government has not passed a new federal consumer privacy law since 1988, when Ronald Reagan signed a law that made it illegal for video store clerks to disclose your VHS rental habits.

Every other form of privacy invasion of your consumer rights is lawful under federal law. So among the things that data brokers will sell to anyone who shows up with a credit card is how much credit card debt any other person is carrying, and how delinquent it is.

Based on that, the nurses are charged a kind of desperation premium. The more debt they’re carrying, the more overdue that debt is, the lower the wage that they’re offered, on the grounds that nurses who are facing economic privation and desperation will accept a lower wage to do the same job.

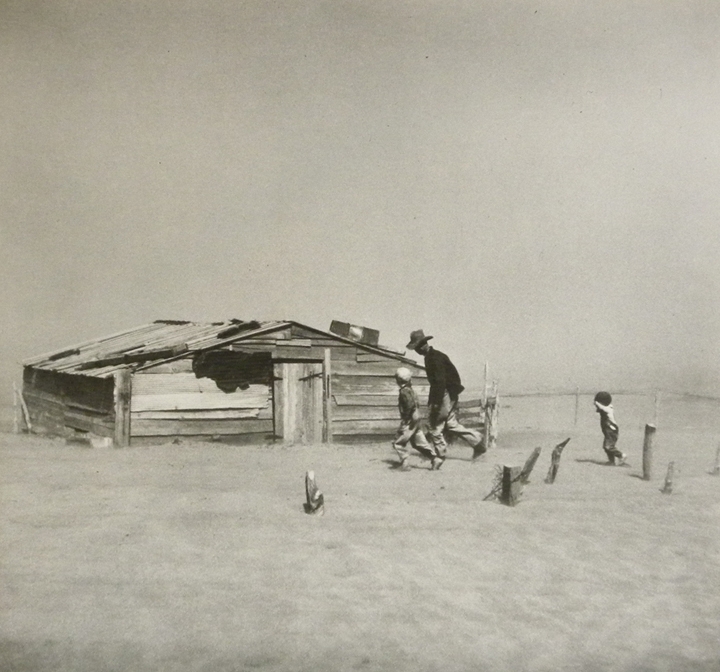

Now this is not a novel insight. Paying more desperate workers less money is a thing that you can find in, like, Tennessee Ernie Ford songs about 19th-century coal bosses. The difference is that if you’re a 19th-century coal boss who wants to figure out how much the lowest wage each coal miner you’re hiring is willing to take, you have to have an army of Pinkertons who are figuring out the economic situation of every coal miner, and you have to have another army of guys in green eye shades who are making annotations to the ledger where you’re calculating their pay packet. It’s just not practical. So automation makes this possible.

Doesn’t that sound like a cross between Nineteen Eighty-Four and the way China monitors its citizens? Wu is seeing how the extraction of wealth is doing something just as evil, but we could call it enshittification too.

Another example, this time from my New York Magazine subscription: “Body Cam Hustle” is about how people are making money off police videos of drunk drivers. States enacted laws requiring police to wear body cameras to gather evidence and protect the innocent. The Internet went from promoting cute cat videos to scenes of personal shame. And to show that society is just as corrupt, audiences prefer to watch women being arrested.

I doubt I need to give any more examples. We all instantly recognize the genius of coining the word enshittification.

Cory Doctorow and Ezra Klein recall fond memories and hopes the Internet gave them when they were young. But it seems the Internet turns everything to shit eventually.

Does every sucky thing that depresses us most today connect to the Internet?

And more importantly, can we fight enshittification?

One area where I’ve noticed people fighting back is subscriptions. Tim Wu says subscriptions are the new, and more efficient, method of extraction. People are switching to Linux and free and open source software, unsubscribing from cloud storage, and going back to DVDs, CDs, and LPs.

Other people are taking up analog hobbies like sewing, gardening, woodworking, cooking, and handicrafts. Young people feel they are embracing the hobbies their grandparents pursued.

And other people are buying local rather than ordering online.

On the other hand, millions are adopting AI and racing full steam ahead into a dark Blade Runner-like cyberpunk future.

Does running from the clutches of Microsoft or Apple into the arms of Linux really help us escape enshittification? If Facebook and X are evil, does it make them less evil to access them from Fedora using the Brave browser? (I’m writing this post from Linux, and it’s been a struggle not to use all my favorite software tools on Windows.)

Would we be happier if we shut off the Internet and went back to televisions with antennas? I’ve contemplated what that would be like. My initial fear is that it would be lonelier. I don’t know why. I have many friends I see regularly. I guess the hive mind feels more connected.

I think we like to share. To communicate with like-minded people regarding our specific interests. Before the Internet, I was involved with science fiction fandom. I published fanzines, belonged to Amateur Press Associations (APAs), was part of a local science fiction club, and went to conventions.

I suppose I could regress.

But do people do that? Shouldn’t we figure out how to move forward and solve our enshittification problems? But how?

What if we split the Internet into two segments? We keep the existing Internet, and create a new one that requires identity verification. Getting a login would require visiting an agency in person and providing proof of your identity, like when we got Real IDs. We would also connect that identity to three types of biometric data. The login to the new Internet would have to be absolutely foolproof, otherwise people wouldn’t trust it.

I know this sounds scary and dangerous, but we’re already doing this piecemeal. Both corporations and criminals already know who we are.

Would people behave better on the Internet if they knew everyone knew exactly who they were? I assume that with such tracking of real identities, it would be almost impossible to rip people off since all activity would have a well-documented trail.

For this to work, corporations would have to be just as open and upfront. They would have to make all their log files public. So any individual could examine all the ways they are being tracked.

Is much of enshittification due to anonymity and hidden corporate practices?

What if everything we did on the Internet was out in full sunlight?

I have no idea if this would help. It could make things much worse. But isn’t everything already getting much worse?

JWH