by James Wallace Harris, 4/21/24

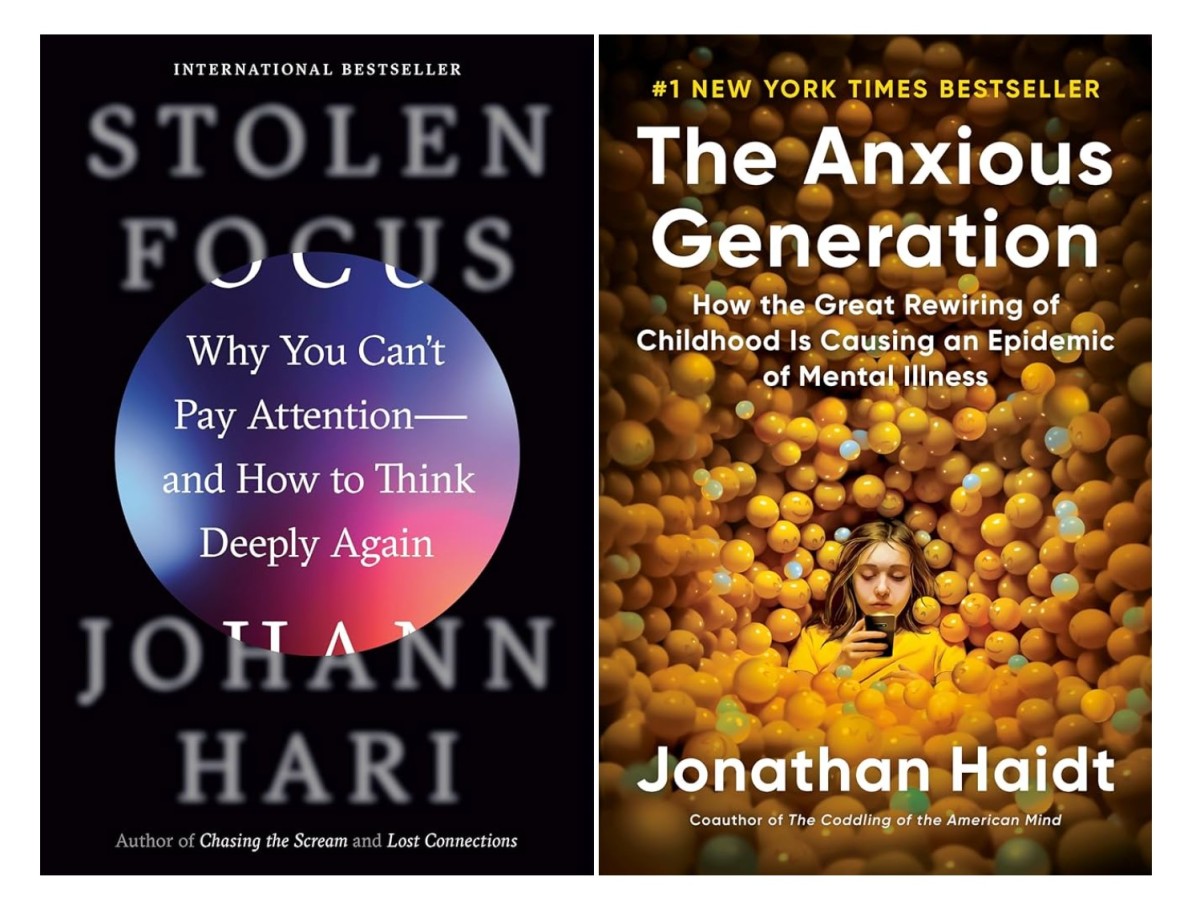

Lately, I’ve been encountering numerous warnings about the dangers of the internet and smartphones. Jonathan Haidt is promoting his new book, The Anxious Generation. Even though it’s about the increase in mental illness among young girls who use smartphones, I think it might tangentially apply to an old guy like me too.

Haidt was inspired to write his book because of reports about the sharp rise in mental illness in young people since 2010. That was just after the invention of the iPhone and the beginnings of social media apps. Recent studies show a correlation between the use of social media on smartphones and the increased reports of mental illness in young girls. I’m not part of Haidt’s anxious generation, but I do wonder if the internet, social media, and smartphones are affecting us old folks too.

Johann Hari’s book, Stolen Focus, is about losing our ability to pay attention, which does affect me. I know I have a focusing problem. I can’t apply myself like I used to. For years, I’ve been thinking it was because I was getting old. Now I wonder if it’s really the internet and smartphones. Give me an iPhone and a La-Z-Boy, and I’m a happy geezer, but not a productive one.

So, I’ve decided to test myself. I deleted Facebook and about twenty other apps from my iPhone, all the ones that keep me playing on my phone rather than doing something else. I didn’t quit Facebook or my other social media accounts; I just deleted the apps from my phone. I figure if I need to use them, I’ll have to get my fat ass out of my La-Z-Boy and go sit upright at my desktop computer.

This little experiment has had an immediate impact — withdrawal symptoms. Without Facebook, YouTube, and all the other apps I kept playing with all day long, I sit in my La-Z-Boy thinking, “What can I do?” I rationalized that reading the news is good, but then I realized that I had way too many news apps. With some trepidation, I deleted The Washington Post, Ground News, Feedly, Reddit, Instapaper, and other apps, except for The New York Times and Apple News+.

I had already deleted Flipboard because it was one huge clickbait trap, but couldn’t that also be true of other news apps? They all demand our attention. When does keeping current turn into a news addiction? What is the minimum daily requirement of news to stay healthy and informed? What amount constitutes news obesity?

I keep picking up my iPhone wanting to do something with it, but there’s less and less to do. I kept The New York Times games app. I play Mini Crossword, Wordle, Connections, and Sudoku every morning. For now, I’m rationalizing that playing those games is exercise for my brain. They only take about 20-30 minutes total. And I can’t think of any non-computer alternatives.

I still use my iPhone for texting, phoning, music streaming, audiobooks, checking the weather, looking up facts, reading Kindle books, etc. The iPhone has become the greatest Swiss Army knife of useful tools ever invented. I don’t think I could ever give it up. Whenever the power goes out, Susan and I go through withdrawal anxiety. Sure, we miss electricity, heating, and cooling, but what we miss the most is streaming TV and the internet. We’ve experienced several three-day outages, and it bugs us more than I think it should.

One of the insights Jonathan Haidt provides is his story about asking groups of parents two questions:

- At what age were you allowed to go off alone unsupervised as a child?

- At what age did you let your children go off unsupervised?

The parents would generally say 5-7 for themselves, but 10-12 for their children. Kids today are overprotected, and smartphones let them retreat from the world even further. Which makes me ask: Am I retreating from the world when I use my smartphone or computer? Has the iPhone become like a helicopter parent that keeps me tied to its apron strings?

That’s a hard question to answer. Isn’t retiring a kind of retreat from the world? Doesn’t getting old make us pull back too? My sister offered a funny observation about life years ago, “We start off life in a bed in a room by ourselves with someone taking care of us, and we end up in bed in a room by ourselves with someone taking care of us.” Isn’t screen addiction only hurrying us towards that end? And will we die with our smartphones clutched tightly in our gnarled old fingers?

Is reading a hardback book any less real than reading the same book on my iPhone screen, or listening to it with earbuds and an iPhone? With the earbuds I can walk, work in the yard, or wash dishes while reading. Is reading The Atlantic in a printed magazine a superior experience to reading it on my iPhone with Apple News+?

Is looking at funny videos less of a life experience than playing with my cat or walking in the botanic gardens?

Haidt ends up advising parents to only allow children under sixteen to own a flip phone. He would prefer that kids wait even longer to get a smartphone, until they complete normal adolescent development, but he doesn’t think that will happen. I don’t think kids will ever go back to flip phones. The other day I noticed that one of the apps I had was rated for ages 4+ in the App Store.

Are we retired folks missing out on some stage of psychological development in our elder years because we use smartphones? As a bookworm with a lifelong addiction to television and recorded music, how can I even know what a normal life would be like? I’m obviously not a hunter-gatherer human, or an agrarian human, or even a human adapted to industrialization. Is white-collar work the new natural? Didn’t we live in nature too long ago for it to be natural anymore?

Aren’t we quickly adapting to a new hivemind way of living? Are the warnings pundits give about smartphones just identifying the side effects of evolving into a new human social structure? Is cyberization the new phase of humanity?

There were people who protested industrialization, but we didn’t reject it. Should we have? Now that there are people rejecting the hivemind, should we reject it too? Or jump in faster?

For days now I’ve been restless without my apps. I have been more active. I seeded my front lawn with mini clover and have been watering and watching it come in. I contracted to have our old bathtub replaced with a shower so it will be safer for Susan. I’ve been working with a bookseller to sell my old science fiction magazines. And I’ve been trying to walk more. However, I’ve yet to do the things I hoped to do when I decided to give up my apps.

It’s hard to tell the cause of doing less later in life. Is it aging? Is it endless distractions? Is it losing the discipline of work after retiring? Before giving up all my apps, I would recline in my La-Z-Boy and play on my iPhone regretting I wasn’t doing anything constructive. Now I sit in my La-Z-Boy doing nothing and wonder why I’m not doing anything constructive. I guess it’s taken a long time to get this lazy, so it might take just as long to overcome that laziness.

JWH