by James Wallace Harris, Wednesday, June 12, 2019

Human memory is rather unreliable. What is seen and heard is never recalled perfectly. Over time, what we do recall degrades. And quite often we can’t remember at all. What would our lives be like if our brains worked like computer hard drives?

Imagine that the input from our five senses could be recorded to files that are perfect digital transcriptions, so that when we played them back we’d see, hear, smell, taste, and touch exactly what we originally sensed.

Human brains and computers both seem to have two kinds of memory. In people, we call them short-term and long-term memory. With computers, it’s working memory and storage.

My friend Linda recently attended her 50th high school reunion and met with about a dozen of her first-grade classmates. Most of them had few memories of that first year of school, which began in September 1957. Imagine being able to load a day from back then into working memory and then attend the reunion. Each 68-year-old fellow student could be compared to their 6-year-old self in great detail. What kind of emotional impact would that have had, compared to the emotions our hazy fragments of memory create now?

Both brains and hard drives have space limitations. If our brains were like hard drives, we’d have to be constantly erasing memory files to make room for new memory recordings. Let’s assume a hard-drive-equipped brain had room to record 100 days of memory.

If you lived a hundred years, you could save one whole day from each year, or about four minutes from every day. What would you save? Of course, you’d sacrifice boring days to add their four minutes to more exciting days. So 100 days of memory sounds like both a lot and a little.

Can you imagine what kinds of memories you’d preserve? Most people would save the memory files of their weddings and the births of their children for sure, but what else would they keep? If you fell in love three times, would you keep memories of each time? If you had sex with a dozen different people, would you keep memories of all twelve? At what point would you want two hours of an exciting vacation badly enough to erase the memory of an old friend you hadn’t seen in years? Or the memory of the last great vacation?

Somehow our brains do this automatically, within their own limitations. We don’t get a whole day each year to preserve, only fleeting moments. Nor do we get to choose what to save or toss.

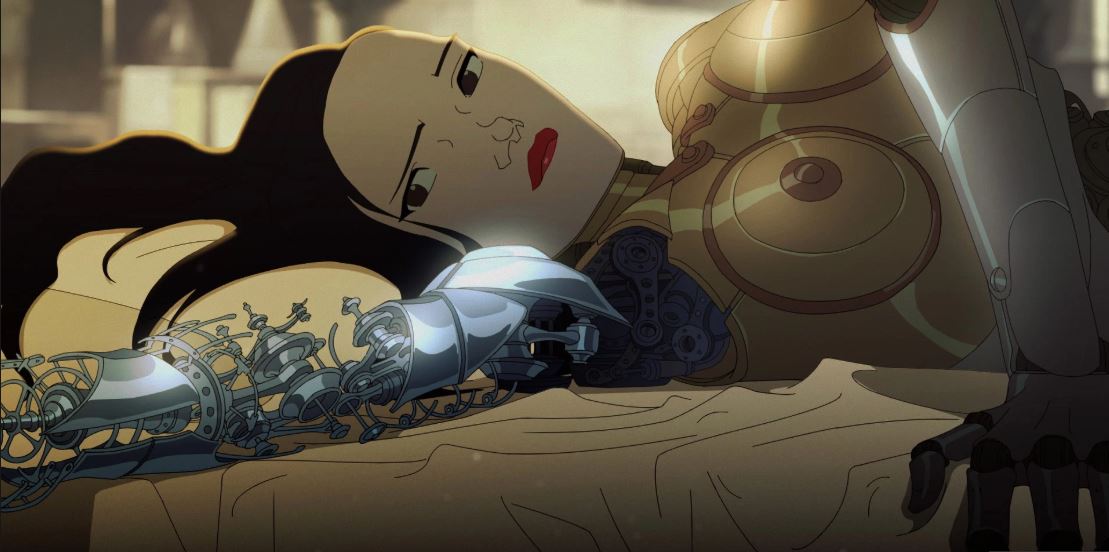

I got to thinking about this topic while writing a story about robots. They will have hard drive memories, and they will have to consciously decide what to save or delete. I realized they would have limitations too. If they had 4K video cameras for eyes and microphones for ears, that’s dozens of megabytes of memory a second to record. Could we ever invent an SSD that could record a century of experience? What if robots needed one SSD’s worth of memory each day and could swap them out? Would they want to save 36,500 SSDs to preserve a century of existence? I don’t think so.
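To get a feel for the scale of the robot’s problem, here is a rough back-of-the-envelope sketch. The recording rate of 50 MB per second is my own assumption, picked to stand in for “dozens of megabytes a second” of 4K video plus sound; it is not a measured figure, and the function name `storage_needed` is just an illustration.

```python
# Back-of-the-envelope storage estimate for a robot that records everything.
# ASSUMPTION: 50 MB/s is a hypothetical stand-in for "dozens of megabytes a second".
MB_PER_SECOND = 50
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 seconds
DAYS_PER_CENTURY = 100 * 365     # 36,500 days, ignoring leap days


def storage_needed(mb_per_second: float, days: int) -> float:
    """Return decimal terabytes (1 TB = 1,000,000 MB) needed to record `days` of nonstop input."""
    return mb_per_second * SECONDS_PER_DAY * days / 1_000_000


per_day_tb = storage_needed(MB_PER_SECOND, 1)
per_century_tb = storage_needed(MB_PER_SECOND, DAYS_PER_CENTURY)

print(f"One day:     {per_day_tb:,.2f} TB")
print(f"One century: {per_century_tb:,.0f} TB (about {per_century_tb / 1000:,.0f} PB)")
```

At that assumed rate, one day comes to about 4.32 TB, a little more than a 4 TB drive, which fits the one-SSD-a-day guess, and a century comes to roughly 158 petabytes. Changing `MB_PER_SECOND` shows how quickly the total moves with the quality of the robot’s senses.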

Evidently, memory is not a normal aspect of reality, much as intelligent self-awareness is rare. Reality likes to bop along, constantly mutating but not remembering all its permutations. When Hindu philosophers teach us to Be Here Now, it’s a rejection of both remembering the past and anticipating the future.

Human intelligence needs memory. I believe sentience needs memory. Compassion needs memory. Think of people who have lost the ability to store memories. They live in the present, but they’ve lost their identity. Losing either short-term or long-term memory shatters our sense of self. The more I think about it, the more I realize the importance of memory to who we are.

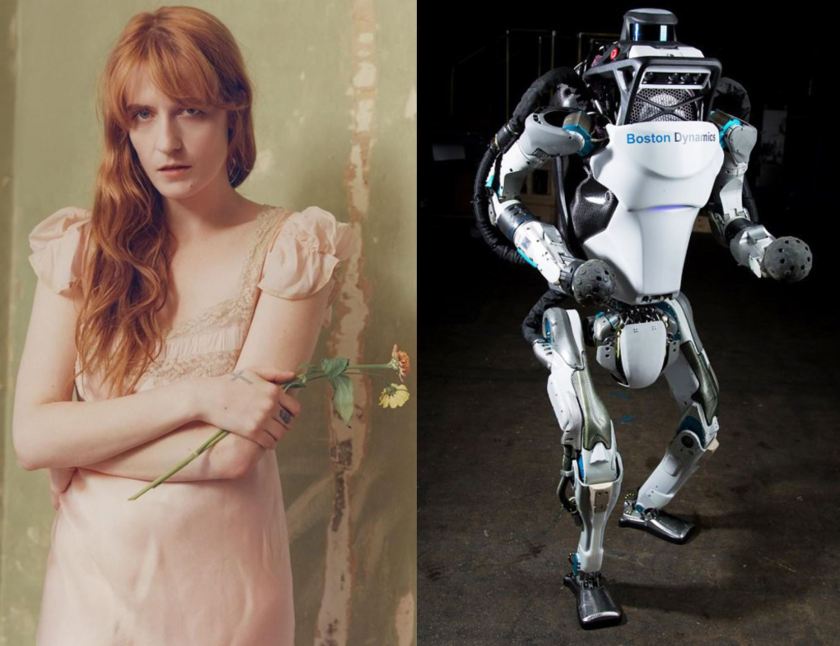

What if technology could graft hard drive connections to our bodies and we could store our memories digitally? Or, what if geneticists could give us genes to create biological memories that are almost as perfect? What new kinds of consciousness would having better memories produce? There are people now with near-perfect memories, but they seem different. What have they lost and gained?

Time and time again, science fiction creates new visions of Humans 2.0. Most of the time, science fiction pictures our replacements with ESP powers. Comic books imagine mutants with super-powers. I’ve been wondering just what better memories would produce. I think a better memory system would be more advantageous than ESP or super-powers.

JWH