In the physical realm of reality, we humans have always believed we were the crown of creation, the smartest beings in a long chain of animal and plant creatures. Yet, as far back into history as our memories go, we have speculated about metaphysical beings that were far smarter than us and had amazing abilities: God and gods, angels and devils, and a whole zoology of spiritual beings. Over the centuries of progress and the development of science, we have come to doubt the existence of such metaphysical beings. We’ve even asked ourselves whether we are alone in the universe, and wondered if there are beings elsewhere in the vast multiverse that are as smart as we are, or even conscious of living in the multiverse.

Ancient Greeks speculated about life on distant worlds. They even imagined the universe was composed of atoms and concluded our world must not be unique. Ever since then there have been people who thought about life on other worlds, about the creation of better humans, or even about the wilder ideas of smart machines and artificial life. We just don’t want to accept that we’re alone.

For most of history, most of humanity has assumed we’re not alone, that spiritual beings existed and they were superior to us. After the Renaissance and the Enlightenment, religious thinking declined and scientific thinking rose. Among the population, a growing number of us have come to accept that physical reality is the only reality. Instead of waiting for God to give us higher powers after we died, we started speculating on how we could give ourselves immortality, greater wisdom, and control over space and time, and we wondered more and more if there are other intelligent, self-aware creatures living in the universe with us. Slowly, a form of literature developed to support this speculation, and it’s generally called science fiction.

In 1818, in her book Frankenstein; or, The Modern Prometheus, Mary Shelley spread the idea that we could find the force that animated life and overcome death. That’s a very apt subtitle, because Prometheus was the Titan who uplifted mankind. Mary Shelley also promoted another great science fictional concept in her 1826 book The Last Man, which speculated that our species could go extinct. If there is no God, we must protect ourselves from extinction and fight against death. But actually, we wanted more.

Then in 1895, in his novel The Time Machine, H. G. Wells suggested to the world that humanity could devolve as well as go extinct. Not only that, he showed how the Earth could die. This was all inspired by Charles Darwin’s On the Origin of Species by Means of Natural Selection, which first appeared in 1859. In The Time Machine Wells imagined life in 802,701 A.D. Instead of picturing the obvious, a superior race of humans evolving, he envisions two species that had branched off from ours, neither of which was superior. He hints that there were greater versions of humanity in the intervening ages, but that we had since devolved. At the end of the book, he suggests that humans devolve even further into mere creatures without any intelligence. This powerful speculative fiction defined the scope of humanity for us: we can become greater, become lesser, or cease to exist.

Then in 1898 Wells gets the world to think about another brilliant science fictional idea: what if there are superior alien beings that can visit Earth and conquer us? In The War of the Worlds, intelligent beings come to exterminate humans. We don’t know how much more intelligent they are, but the Martians can build great machines and travel across space. There had been other books about alien invaders and time travel, but H. G. Wells made these ideas common speculation.

In 1911, J. D. Beresford published The Hampdenshire Wonder, a book about a deformed child with a super powerful brain, a prodigy or wunderkind of amazing abilities. Beresford and his novel were never as famous as his contemporary H. G. Wells, but The Wonder was an idea whose time had come. How much smarter could a human become? Readers of science fiction, and some people in the world at large, were now wondering about the powers of the mind, as well as speculating about how powerful alien minds could be. Stories about robots had existed before then, as had Frankenstein- and Golem-like creatures, but the public had not fixated on the idea of superior machine intelligence or artificial life. But we were on our way to imagining a superior man, a superman.

Prodigies such as musical prodigies and math geniuses were well known and speculated about, but Beresford suggests the human mind had a lot more potential in it. He also zooms in on the resentment factor: how ordinary people resent a mind far superior to their own.

The 1930 novel Gladiator by Philip Wylie suggests it’s possible to enhance humans with a serum that improves their physical strength. There is no scientific reasoning behind this, other than to suggest we could have the proportional weight-lifting power of an ant and the jumping power of a grasshopper. All of this merely foreshadows the Superman comics (1938). The theory behind Superman is that he’s an alien with advanced powers, not an enhanced human.

Comic books embraced the idea of super-heroes, speculating about an endless variety of ways humans could gain new features and powers. Comics have never been very scientific; instead they copied ideas and themes from the ancient gods and goddesses. It’s all wish-fulfillment fantasy. People are exposed to radiation and lightning all the time and don’t mutate. However, all of this led to speculation about what humans could become, and how evolution might produce Homo Sapiens 2.0.

In 1935 Olaf Stapledon published Odd John: A Story Between Jest and Earnest. Stapledon went far beyond comics in his speculation about what a superman might be, how they would act, and how society would react. Science fiction is now seriously philosophizing about the future and potential of the human race.

Olaf Stapledon was a far-reaching thinker and a serious science fiction writer. Last and First Men (1930) describes eighteen species of humans, while Star Maker (1937) tried to write a history of life in the universe. These books are not typical novels, but more like fictional histories. The scope of Stapledon’s speculation was tremendous, and few science fiction writers have attempted to best him.

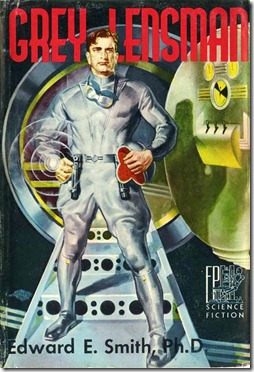

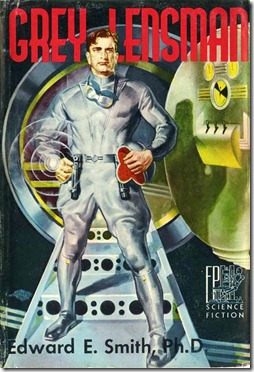

1930s science fiction was full of stories about accelerated evolution, such as “The Man Who Evolved” by Edmond Hamilton, and this culminated in the super-science of E. E. Smith’s Lensman stories (1934-1948). Science fiction fans ate this stuff up, and many people consider the ideas in the Lensman series an inspiration for Star Wars. The stories involve two super alien races fighting a galactic war over vast time scales, using client races that they uplifted with knowledge and superior technology.

Smith’s ideas weren’t completely new, but he put them together in an exciting series that really jump-started the science fiction genre of the 1940s and 1950s. Smith presented the idea that aliens could be godlike or devilish in their abilities, wisdom and knowledge, and they could bestow great powers on those who follow them. The science behind all of this is hogwash. It’s pandering to ancient religious beliefs by presenting the same ideas in pseudo-science costumes.

Star Wars has exactly the same appeal. Humans, especially adolescent boys, and now liberated girls, want power and adventure. These powers and adventures are no different from what Greek, Roman, Hindu and Norse gods experienced. The excitement of Golden Age Science Fiction from the pulp magazines of the 1940s and into the psycho-social science fiction of the 1950s represents the unleashing of great desires: desires for immortality, for ruling the heavens, for telepathy, telekinesis, teleportation, flying faster than light, and becoming as all-knowing as God.

Starting in the 1950s, especially with movies, and expanding in the 1960s with television shows like Star Trek, these ideas became widely popular, almost universal, and during the next 50 years, they came to dominate the most popular films. There is a huge pent-up desire here for the fantastic and the transcendental through the powers of science.

Science fiction writers have often faced the challenge of presenting a super-advanced being, either a very evolved human, a powerful alien, or an AI with vast intelligence, as a character in their stories. Generally, the assumption is that super-intelligence equals ESP-like powers. How often in Outer Limits, Star Trek, Star Wars, or in written science fiction, have you seen a highly evolved human read minds or move matter with thought, as Valentine Michael Smith does in Stranger in a Strange Land? This goes way back in science fiction. The same thing is true when aliens come down to visit in their flying saucers. If they are presented as coming from an ancient civilization, they might not even have bodies, but they can manipulate space and time at will.

Isn’t that all silly? How does higher IQ equal overcoming the physical laws of reality?

Back in 1961, Robert Heinlein suggested that a very ancient race of Martians had conquered space and time with their minds, and that they taught their techniques to a normal human, as if it were no more difficult than learning yoga. Really? Is that believable? Well, science fiction fans ate this up too. And then in 1977 Star Wars suggested similar powers for the Jedi. Why do people want to believe thinking can be that powerful? Obviously, because they hope to have such power.

Valentine Michael Smith could make objects move or disappear. He could kill people at will by sending them into another dimension. He also had fakir-like control over his body that allowed him to hibernate and appear dead. He could also talk with ghosts. Heinlein gives us no explanation of how these wild talents developed, or how they could function within the rules of physics.

Like Luke Skywalker learning to use The Force, people hope to transcend their old way of being through will power. So far we haven’t had much luck with that concept. The next step is to invent machines that could enhance us.

In “The Sixth Finger” (1963), one of the classic episodes of the original Outer Limits, David McCallum plays an ordinary miner who is put into a mad scientist’s chamber, where his body undergoes speeded-up evolution. McCallum’s brain gets huge, he grows a sixth finger on each hand, and his mental powers become enormous. This superman moves beyond love and hate and sees normal humans as beneath his consideration.

This is a step beyond Heinlein. It suggests that evolution will eventually produce a smarter human, but it gives us no reason why we should believe this. One real theory holds that humans evolved as they did to adapt to climate change, so we could survive in many different environments and climates. Humanity has faced all kinds of challenges, and we’ve yet to morph into anything new.

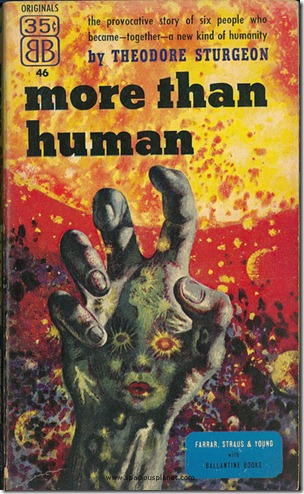

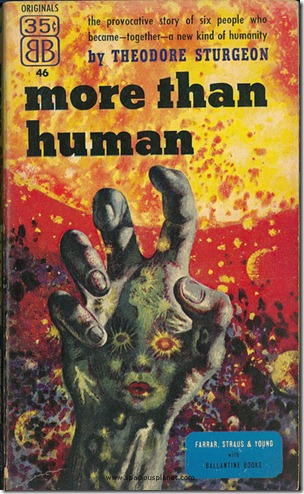

Back in 1953, in More Than Human, Theodore Sturgeon proposed that mutations might already exist in the population, and suggested that six such individuals could come together to blend their talents into a gestalt consciousness. The first part of the story is called “The Fabulous Idiot” and reminds us we’ve long known about idiot savants who have wild talents. We have to give Sturgeon credit for sticking close to reality and not just making up some science fiction mumbo-jumbo, except that he suggests that these misfits have ESP or telepathy, that darling concept of 1950s science fiction writers. Without telepathy we can’t create the gestalt.

There are humans with magnificent mental abilities, with photographic memories, wizards with numbers and math, but most of them have other weaknesses that keep them from being fully functional as social beings. There seems to be a problem with the human mind focusing too closely on any one talent at the expense of general abilities.

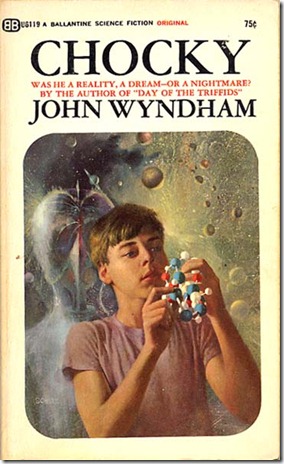

In Chocky (1968), John Wyndham’s solution is to have an alien intelligence inhabit a boy. This is sort of a cheat, don’t you think? Without explaining how an alien mind can occupy our mind and why its mind is superior, this is no more than waving a wand and saying, let it be so.

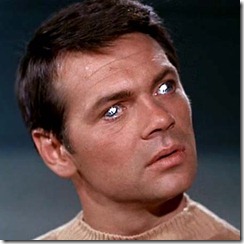

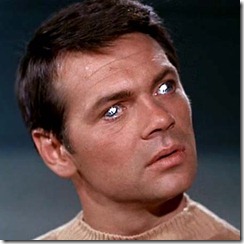

Star Trek explores accidentally accelerated evolution when the Enterprise hits a magnetic storm at the edge of the galaxy and crewman Gary Mitchell develops godlike psionic powers. Like many evolved beings in stories, Gary becomes a threat to normal people and feels no moral restraint about killing them. Heinlein presented Valentine Michael Smith as just in his use of powers to disappear people, but Captain Kirk and Mr. Spock see Gary Mitchell as evil and decide he must be destroyed.

This episode was the second pilot for the original Star Trek series, and Mr. Spock is very aggressive, brandishing a rather large and powerful phaser rifle. Later on Mr. Spock becomes the ideal of mental self-control and the evolved being, but then he’s a Vulcan. The implication is that control over feelings will lead to greater mental powers.

In 1965, with The Moon Is a Harsh Mistress, Heinlein returns with a newer version of Mike from Stranger in a Strange Land. Once again, this Mike is an innocent, but this time he is a machine coming into consciousness. Once again he has to learn how the world works and develop his own talents. Being a machine, he has abilities that humans don’t and can’t have. Now we’re onto something. If we can’t evolve our brains, why not use our brains to build a better brain? Mike is a friendly computer, but many people fear this idea.

Just a year later, in 1966, D. F. Jones imagines in Colossus a world controlled by two giant military computers. Later, in 1983, the film WarGames imagines another dangerous military computer with consciousness. This happens quite often in science fiction: uppity computers that must be outwitted by slower-minded humans. We seldom get to explore the potential of a smart computer.

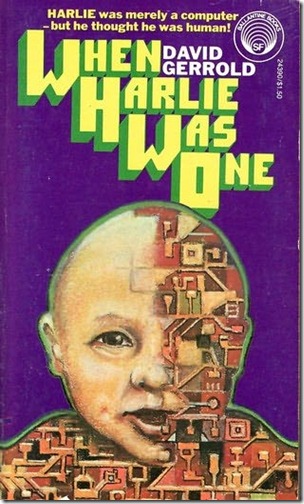

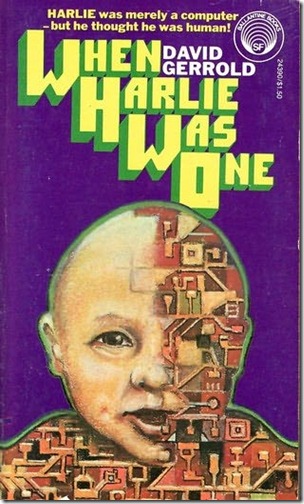

In 1972, David Gerrold writes When HARLIE Was One, a science fiction novel that thoughtfully explores the idea of an emerging machine intelligence, and even speculates on many interesting ideas that would later become part of the computer age, including computer viruses. Gerrold builds on what Heinlein started with The Moon Is a Harsh Mistress.

In 1995, literary novelist Richard Powers explores the idea of machine intelligence with Galatea 2.2, in which scientists build a computer named Helen to understand English literature.

Then in 2009, Robert J. Sawyer began a trilogy about an emerging AI that evolves out of the Internet and names itself Webmind. Webmind works hard not to be threatening and wants to help humanity.

Let’s imagine a Homo Sapiens 2.0, or a BEM (bug-eyed monster), or an AI with an IQ of 1,000. I don’t know if that’s meaningful on the actual scale, but the highest IQs recorded are just over 200, so let’s use 1,000 as a theoretical marker. Let’s imagine an IBM Watson with all that brainpower and more, so he/she was like a human with a computer’s thinking speed and memory.

What would it mean to have an IQ of 1,000? It would mean the AI, alien or Homo Sapiens 2.0 would think very, very fast, remember incredibly well, and solve brain teasers faster than anyone on Earth. It wouldn’t mean it could read minds or move matter at will, although I’d expect it to deduce information about people the way Sherlock Holmes does.

Probably all math and physics would be a snap to such a being. In fact, it would think so fast and know so much that it might not find much of interest in reality. It wouldn’t know everything, but let’s imagine it could consciously perform calculations like those supercomputers make to predict the weather, analyze subatomic particle experiments, or run the Wall Street stock exchange.

What would such a being feel? How would it occupy its mind with creative pursuits?

As humans, we feel we are the crown of creation and that intelligence is the grand purpose of the universe, but when you start studying the multiverse, that might not be so. We’re just one of an infinity of creations. There might be limits to intelligence, just as there are physical limits in the universe, like the speed of light.

Science fiction hasn’t begun to explore the possibilities of higher intelligence, but I do think there are limits of awareness, limits of thought and limits of intelligence. All too often science fiction has taken the easy way out and assumed higher intelligence equals godlike powers. What does it truly mean to know about every sparrow that falls from a tree? Is that possible?

Computers are teaching us a lot about intelligence. So far, they show that brilliance is possible without awareness.

Science fiction has explored the nature of alien minds, machine minds and evolved human minds over and over, yet these explorations have come up with very little of substance. I often wonder if the universe wouldn’t appear simple to a mind with only moderately more intelligence, education and self-awareness. If we could couple the mind of a human with IBM’s Watson, the resulting mind might be smart enough to fully comprehend reality and build almost anything that needs to be built or invented. Such a being would know if it’s worth the effort to travel to the stars, or just sit and watch existence as it is.

JWH – 5/15/12